mirror of

https://github.com/shivammehta25/Matcha-TTS.git

synced 2026-02-05 02:09:21 +08:00

Compare commits

115 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

bd4d90d932 | ||

|

|

108906c603 | ||

|

|

354f5dc69f | ||

|

|

8e5f98476e | ||

|

|

7e499df0b2 | ||

|

|

0735e653fc | ||

|

|

f9843cfca4 | ||

|

|

289ef51578 | ||

|

|

7a65f83b17 | ||

|

|

7275764a48 | ||

|

|

863bfbdd8b | ||

|

|

4bc541705a | ||

|

|

a3fea22988 | ||

|

|

d56f40765c | ||

|

|

b0ba920dc1 | ||

|

|

a220f283e3 | ||

|

|

1df73ef43e | ||

|

|

404b045b65 | ||

|

|

7cfae6bed4 | ||

|

|

a83fd29829 | ||

|

|

c8178bf2cd | ||

|

|

8b1284993a | ||

|

|

0000f93021 | ||

|

|

c2569a1018 | ||

|

|

bd058a68f7 | ||

|

|

362ba2dce7 | ||

|

|

77804265f8 | ||

|

|

d31cd92a61 | ||

|

|

068d135e20 | ||

|

|

bd37d03b62 | ||

|

|

ac0b258f80 | ||

|

|

de910380bc | ||

|

|

aa496aa13f | ||

|

|

e658aee6a5 | ||

|

|

d816c40e3d | ||

|

|

4b39f6cad0 | ||

|

|

dd9105b34b | ||

|

|

7d9d4cfd40 | ||

|

|

256adc55d3 | ||

|

|

bfcbdbc82e | ||

|

|

fb7b954de5 | ||

|

|

5a52a67cf7 | ||

|

|

39cbd85236 | ||

|

|

47a629f128 | ||

|

|

95ec24b599 | ||

|

|

5a2a893750 | ||

|

|

13ca33fbe5 | ||

|

|

19bea20928 | ||

|

|

8268360674 | ||

|

|

a0bf4e9e9a | ||

|

|

f1e8efdec2 | ||

|

|

4ec245e61e | ||

|

|

dc035a09f2 | ||

|

|

254a8e05ce | ||

|

|

0ed9290c31 | ||

|

|

f39ee6cf3b | ||

|

|

6e71dc8b8f | ||

|

|

ae2417c175 | ||

|

|

6c7a82a516 | ||

|

|

009b09a8b2 | ||

|

|

a18db17330 | ||

|

|

263d5c4d4e | ||

|

|

df896301ca | ||

|

|

c8d0d60f87 | ||

|

|

e540794e7e | ||

|

|

b756809a32 | ||

|

|

1ead4303f3 | ||

|

|

7a29fef719 | ||

|

|

9ace522249 | ||

|

|

ed6e6bbf6c | ||

|

|

51ea36d271 | ||

|

|

269609003b | ||

|

|

2a81800825 | ||

|

|

336dd20d5b | ||

|

|

01c99161c4 | ||

|

|

2c21a0edac | ||

|

|

25767f76a8 | ||

|

|

1b204ed42c | ||

|

|

2cd057187b | ||

|

|

d373e9a5b1 | ||

|

|

f12be190a4 | ||

|

|

be998ae31f | ||

|

|

a49af19f48 | ||

|

|

7850aa0910 | ||

|

|

309739b7cc | ||

|

|

0aaabf90c4 | ||

|

|

c5dab67d9f | ||

|

|

d098f32730 | ||

|

|

281a098337 | ||

|

|

db95158043 | ||

|

|

267bf96651 | ||

|

|

72635012b0 | ||

|

|

9ceee279f0 | ||

|

|

d7b9a37359 | ||

|

|

ec43ef0732 | ||

|

|

a38b065393 | ||

|

|

8e0425de68 | ||

|

|

fc7377fe7e | ||

|

|

b3a7305bf9 | ||

|

|

1a376883e3 | ||

|

|

582407b699 | ||

|

|

54421b3ced | ||

|

|

bd55eb76f2 | ||

|

|

a9251ed984 | ||

|

|

c079e5254a | ||

|

|

0554a5b87c | ||

|

|

ed1c9d87b5 | ||

|

|

acb57a3857 | ||

|

|

88bc7d05eb | ||

|

|

f37918d9d2 | ||

|

|

e960632fb0 | ||

|

|

772d8e69b4 | ||

|

|

8458c86075 | ||

|

|

a90a5f4ac1 | ||

|

|

f016784049 |

6

.env.example

Normal file

6

.env.example

Normal file

@@ -0,0 +1,6 @@

|

|||||||

|

# example of file for storing private and user specific environment variables, like keys or system paths

|

||||||

|

# rename it to ".env" (excluded from version control by default)

|

||||||

|

# .env is loaded by train.py automatically

|

||||||

|

# hydra allows you to reference variables in .yaml configs with special syntax: ${oc.env:MY_VAR}

|

||||||

|

|

||||||

|

MY_VAR="/home/user/my/system/path"

|

||||||

22

.github/PULL_REQUEST_TEMPLATE.md

vendored

Normal file

22

.github/PULL_REQUEST_TEMPLATE.md

vendored

Normal file

@@ -0,0 +1,22 @@

|

|||||||

|

## What does this PR do?

|

||||||

|

|

||||||

|

<!--

|

||||||

|

Please include a summary of the change and which issue is fixed.

|

||||||

|

Please also include relevant motivation and context.

|

||||||

|

List any dependencies that are required for this change.

|

||||||

|

List all the breaking changes introduced by this pull request.

|

||||||

|

-->

|

||||||

|

|

||||||

|

Fixes #\<issue_number>

|

||||||

|

|

||||||

|

## Before submitting

|

||||||

|

|

||||||

|

- [ ] Did you make sure **title is self-explanatory** and **the description concisely explains the PR**?

|

||||||

|

- [ ] Did you make sure your **PR does only one thing**, instead of bundling different changes together?

|

||||||

|

- [ ] Did you list all the **breaking changes** introduced by this pull request?

|

||||||

|

- [ ] Did you **test your PR locally** with `pytest` command?

|

||||||

|

- [ ] Did you **run pre-commit hooks** with `pre-commit run -a` command?

|

||||||

|

|

||||||

|

## Did you have fun?

|

||||||

|

|

||||||

|

Make sure you had fun coding 🙃

|

||||||

15

.github/codecov.yml

vendored

Normal file

15

.github/codecov.yml

vendored

Normal file

@@ -0,0 +1,15 @@

|

|||||||

|

coverage:

|

||||||

|

status:

|

||||||

|

# measures overall project coverage

|

||||||

|

project:

|

||||||

|

default:

|

||||||

|

threshold: 100% # how much decrease in coverage is needed to not consider success

|

||||||

|

|

||||||

|

# measures PR or single commit coverage

|

||||||

|

patch:

|

||||||

|

default:

|

||||||

|

threshold: 100% # how much decrease in coverage is needed to not consider success

|

||||||

|

|

||||||

|

|

||||||

|

# project: off

|

||||||

|

# patch: off

|

||||||

17

.github/dependabot.yml

vendored

Normal file

17

.github/dependabot.yml

vendored

Normal file

@@ -0,0 +1,17 @@

|

|||||||

|

# To get started with Dependabot version updates, you'll need to specify which

|

||||||

|

# package ecosystems to update and where the package manifests are located.

|

||||||

|

# Please see the documentation for all configuration options:

|

||||||

|

# https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates

|

||||||

|

|

||||||

|

version: 2

|

||||||

|

updates:

|

||||||

|

- package-ecosystem: "pip" # See documentation for possible values

|

||||||

|

directory: "/" # Location of package manifests

|

||||||

|

target-branch: "dev"

|

||||||

|

schedule:

|

||||||

|

interval: "daily"

|

||||||

|

ignore:

|

||||||

|

- dependency-name: "pytorch-lightning"

|

||||||

|

update-types: ["version-update:semver-patch"]

|

||||||

|

- dependency-name: "torchmetrics"

|

||||||

|

update-types: ["version-update:semver-patch"]

|

||||||

44

.github/release-drafter.yml

vendored

Normal file

44

.github/release-drafter.yml

vendored

Normal file

@@ -0,0 +1,44 @@

|

|||||||

|

name-template: "v$RESOLVED_VERSION"

|

||||||

|

tag-template: "v$RESOLVED_VERSION"

|

||||||

|

|

||||||

|

categories:

|

||||||

|

- title: "🚀 Features"

|

||||||

|

labels:

|

||||||

|

- "feature"

|

||||||

|

- "enhancement"

|

||||||

|

- title: "🐛 Bug Fixes"

|

||||||

|

labels:

|

||||||

|

- "fix"

|

||||||

|

- "bugfix"

|

||||||

|

- "bug"

|

||||||

|

- title: "🧹 Maintenance"

|

||||||

|

labels:

|

||||||

|

- "maintenance"

|

||||||

|

- "dependencies"

|

||||||

|

- "refactoring"

|

||||||

|

- "cosmetic"

|

||||||

|

- "chore"

|

||||||

|

- title: "📝️ Documentation"

|

||||||

|

labels:

|

||||||

|

- "documentation"

|

||||||

|

- "docs"

|

||||||

|

|

||||||

|

change-template: "- $TITLE @$AUTHOR (#$NUMBER)"

|

||||||

|

change-title-escapes: '\<*_&' # You can add # and @ to disable mentions

|

||||||

|

|

||||||

|

version-resolver:

|

||||||

|

major:

|

||||||

|

labels:

|

||||||

|

- "major"

|

||||||

|

minor:

|

||||||

|

labels:

|

||||||

|

- "minor"

|

||||||

|

patch:

|

||||||

|

labels:

|

||||||

|

- "patch"

|

||||||

|

default: patch

|

||||||

|

|

||||||

|

template: |

|

||||||

|

## Changes

|

||||||

|

|

||||||

|

$CHANGES

|

||||||

164

.gitignore

vendored

Normal file

164

.gitignore

vendored

Normal file

@@ -0,0 +1,164 @@

|

|||||||

|

# Byte-compiled / optimized / DLL files

|

||||||

|

__pycache__/

|

||||||

|

*.py[cod]

|

||||||

|

*$py.class

|

||||||

|

|

||||||

|

# C extensions

|

||||||

|

*.so

|

||||||

|

|

||||||

|

# Distribution / packaging

|

||||||

|

.Python

|

||||||

|

build/

|

||||||

|

develop-eggs/

|

||||||

|

dist/

|

||||||

|

downloads/

|

||||||

|

eggs/

|

||||||

|

.eggs/

|

||||||

|

lib/

|

||||||

|

lib64/

|

||||||

|

parts/

|

||||||

|

sdist/

|

||||||

|

var/

|

||||||

|

wheels/

|

||||||

|

pip-wheel-metadata/

|

||||||

|

share/python-wheels/

|

||||||

|

*.egg-info/

|

||||||

|

.installed.cfg

|

||||||

|

*.egg

|

||||||

|

MANIFEST

|

||||||

|

|

||||||

|

# PyInstaller

|

||||||

|

# Usually these files are written by a python script from a template

|

||||||

|

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

||||||

|

*.manifest

|

||||||

|

*.spec

|

||||||

|

|

||||||

|

# Installer logs

|

||||||

|

pip-log.txt

|

||||||

|

pip-delete-this-directory.txt

|

||||||

|

|

||||||

|

# Unit test / coverage reports

|

||||||

|

htmlcov/

|

||||||

|

.tox/

|

||||||

|

.nox/

|

||||||

|

.coverage

|

||||||

|

.coverage.*

|

||||||

|

.cache

|

||||||

|

nosetests.xml

|

||||||

|

coverage.xml

|

||||||

|

*.cover

|

||||||

|

*.py,cover

|

||||||

|

.hypothesis/

|

||||||

|

.pytest_cache/

|

||||||

|

|

||||||

|

# Translations

|

||||||

|

*.mo

|

||||||

|

*.pot

|

||||||

|

|

||||||

|

# Django stuff:

|

||||||

|

*.log

|

||||||

|

local_settings.py

|

||||||

|

db.sqlite3

|

||||||

|

db.sqlite3-journal

|

||||||

|

|

||||||

|

# Flask stuff:

|

||||||

|

instance/

|

||||||

|

.webassets-cache

|

||||||

|

|

||||||

|

# Scrapy stuff:

|

||||||

|

.scrapy

|

||||||

|

|

||||||

|

# Sphinx documentation

|

||||||

|

docs/_build/

|

||||||

|

|

||||||

|

# PyBuilder

|

||||||

|

target/

|

||||||

|

|

||||||

|

# Jupyter Notebook

|

||||||

|

.ipynb_checkpoints

|

||||||

|

|

||||||

|

# IPython

|

||||||

|

profile_default/

|

||||||

|

ipython_config.py

|

||||||

|

|

||||||

|

# pyenv

|

||||||

|

.python-version

|

||||||

|

|

||||||

|

# pipenv

|

||||||

|

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

||||||

|

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

||||||

|

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

||||||

|

# install all needed dependencies.

|

||||||

|

#Pipfile.lock

|

||||||

|

|

||||||

|

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

||||||

|

__pypackages__/

|

||||||

|

|

||||||

|

# Celery stuff

|

||||||

|

celerybeat-schedule

|

||||||

|

celerybeat.pid

|

||||||

|

|

||||||

|

# SageMath parsed files

|

||||||

|

*.sage.py

|

||||||

|

|

||||||

|

# Environments

|

||||||

|

.venv

|

||||||

|

env/

|

||||||

|

venv/

|

||||||

|

ENV/

|

||||||

|

env.bak/

|

||||||

|

venv.bak/

|

||||||

|

|

||||||

|

# Spyder project settings

|

||||||

|

.spyderproject

|

||||||

|

.spyproject

|

||||||

|

|

||||||

|

# Rope project settings

|

||||||

|

.ropeproject

|

||||||

|

|

||||||

|

# mkdocs documentation

|

||||||

|

/site

|

||||||

|

|

||||||

|

# mypy

|

||||||

|

.mypy_cache/

|

||||||

|

.dmypy.json

|

||||||

|

dmypy.json

|

||||||

|

|

||||||

|

# Pyre type checker

|

||||||

|

.pyre/

|

||||||

|

|

||||||

|

### VisualStudioCode

|

||||||

|

.vscode/*

|

||||||

|

!.vscode/settings.json

|

||||||

|

!.vscode/tasks.json

|

||||||

|

!.vscode/launch.json

|

||||||

|

!.vscode/extensions.json

|

||||||

|

*.code-workspace

|

||||||

|

**/.vscode

|

||||||

|

|

||||||

|

# JetBrains

|

||||||

|

.idea/

|

||||||

|

|

||||||

|

# Data & Models

|

||||||

|

*.h5

|

||||||

|

*.tar

|

||||||

|

*.tar.gz

|

||||||

|

|

||||||

|

# Lightning-Hydra-Template

|

||||||

|

configs/local/default.yaml

|

||||||

|

/data/

|

||||||

|

/logs/

|

||||||

|

.env

|

||||||

|

|

||||||

|

# Aim logging

|

||||||

|

.aim

|

||||||

|

|

||||||

|

# Cython complied files

|

||||||

|

matcha/utils/monotonic_align/core.c

|

||||||

|

|

||||||

|

# Ignoring hifigan checkpoint

|

||||||

|

generator_v1

|

||||||

|

g_02500000

|

||||||

|

gradio_cached_examples/

|

||||||

|

synth_output/

|

||||||

|

/data

|

||||||

59

.pre-commit-config.yaml

Normal file

59

.pre-commit-config.yaml

Normal file

@@ -0,0 +1,59 @@

|

|||||||

|

default_language_version:

|

||||||

|

python: python3.11

|

||||||

|

|

||||||

|

repos:

|

||||||

|

- repo: https://github.com/pre-commit/pre-commit-hooks

|

||||||

|

rev: v4.5.0

|

||||||

|

hooks:

|

||||||

|

# list of supported hooks: https://pre-commit.com/hooks.html

|

||||||

|

- id: trailing-whitespace

|

||||||

|

- id: end-of-file-fixer

|

||||||

|

# - id: check-docstring-first

|

||||||

|

- id: check-yaml

|

||||||

|

- id: debug-statements

|

||||||

|

- id: detect-private-key

|

||||||

|

- id: check-toml

|

||||||

|

- id: check-case-conflict

|

||||||

|

- id: check-added-large-files

|

||||||

|

|

||||||

|

# python code formatting

|

||||||

|

- repo: https://github.com/psf/black

|

||||||

|

rev: 23.12.1

|

||||||

|

hooks:

|

||||||

|

- id: black

|

||||||

|

args: [--line-length, "120"]

|

||||||

|

|

||||||

|

# python import sorting

|

||||||

|

- repo: https://github.com/PyCQA/isort

|

||||||

|

rev: 5.13.2

|

||||||

|

hooks:

|

||||||

|

- id: isort

|

||||||

|

args: ["--profile", "black", "--filter-files"]

|

||||||

|

|

||||||

|

# python upgrading syntax to newer version

|

||||||

|

- repo: https://github.com/asottile/pyupgrade

|

||||||

|

rev: v3.15.0

|

||||||

|

hooks:

|

||||||

|

- id: pyupgrade

|

||||||

|

args: [--py38-plus]

|

||||||

|

|

||||||

|

# python check (PEP8), programming errors and code complexity

|

||||||

|

- repo: https://github.com/PyCQA/flake8

|

||||||

|

rev: 7.0.0

|

||||||

|

hooks:

|

||||||

|

- id: flake8

|

||||||

|

args:

|

||||||

|

[

|

||||||

|

"--max-line-length", "120",

|

||||||

|

"--extend-ignore",

|

||||||

|

"E203,E402,E501,F401,F841,RST2,RST301",

|

||||||

|

"--exclude",

|

||||||

|

"logs/*,data/*,matcha/hifigan/*",

|

||||||

|

]

|

||||||

|

additional_dependencies: [flake8-rst-docstrings==0.3.0]

|

||||||

|

|

||||||

|

# pylint

|

||||||

|

- repo: https://github.com/pycqa/pylint

|

||||||

|

rev: v3.0.3

|

||||||

|

hooks:

|

||||||

|

- id: pylint

|

||||||

2

.project-root

Normal file

2

.project-root

Normal file

@@ -0,0 +1,2 @@

|

|||||||

|

# this file is required for inferring the project root directory

|

||||||

|

# do not delete

|

||||||

525

.pylintrc

Normal file

525

.pylintrc

Normal file

@@ -0,0 +1,525 @@

|

|||||||

|

[MASTER]

|

||||||

|

|

||||||

|

# A comma-separated list of package or module names from where C extensions may

|

||||||

|

# be loaded. Extensions are loading into the active Python interpreter and may

|

||||||

|

# run arbitrary code.

|

||||||

|

extension-pkg-whitelist=

|

||||||

|

|

||||||

|

# Add files or directories to the blacklist. They should be base names, not

|

||||||

|

# paths.

|

||||||

|

ignore=CVS

|

||||||

|

|

||||||

|

# Add files or directories matching the regex patterns to the blacklist. The

|

||||||

|

# regex matches against base names, not paths.

|

||||||

|

ignore-patterns=

|

||||||

|

|

||||||

|

# Python code to execute, usually for sys.path manipulation such as

|

||||||

|

# pygtk.require().

|

||||||

|

#init-hook=

|

||||||

|

|

||||||

|

# Use multiple processes to speed up Pylint. Specifying 0 will auto-detect the

|

||||||

|

# number of processors available to use.

|

||||||

|

jobs=1

|

||||||

|

|

||||||

|

# Control the amount of potential inferred values when inferring a single

|

||||||

|

# object. This can help the performance when dealing with large functions or

|

||||||

|

# complex, nested conditions.

|

||||||

|

limit-inference-results=100

|

||||||

|

|

||||||

|

# List of plugins (as comma separated values of python modules names) to load,

|

||||||

|

# usually to register additional checkers.

|

||||||

|

load-plugins=

|

||||||

|

|

||||||

|

# Pickle collected data for later comparisons.

|

||||||

|

persistent=yes

|

||||||

|

|

||||||

|

# Specify a configuration file.

|

||||||

|

#rcfile=

|

||||||

|

|

||||||

|

# When enabled, pylint would attempt to guess common misconfiguration and emit

|

||||||

|

# user-friendly hints instead of false-positive error messages.

|

||||||

|

suggestion-mode=yes

|

||||||

|

|

||||||

|

# Allow loading of arbitrary C extensions. Extensions are imported into the

|

||||||

|

# active Python interpreter and may run arbitrary code.

|

||||||

|

unsafe-load-any-extension=no

|

||||||

|

|

||||||

|

|

||||||

|

[MESSAGES CONTROL]

|

||||||

|

|

||||||

|

# Only show warnings with the listed confidence levels. Leave empty to show

|

||||||

|

# all. Valid levels: HIGH, INFERENCE, INFERENCE_FAILURE, UNDEFINED.

|

||||||

|

confidence=

|

||||||

|

|

||||||

|

# Disable the message, report, category or checker with the given id(s). You

|

||||||

|

# can either give multiple identifiers separated by comma (,) or put this

|

||||||

|

# option multiple times (only on the command line, not in the configuration

|

||||||

|

# file where it should appear only once). You can also use "--disable=all" to

|

||||||

|

# disable everything first and then reenable specific checks. For example, if

|

||||||

|

# you want to run only the similarities checker, you can use "--disable=all

|

||||||

|

# --enable=similarities". If you want to run only the classes checker, but have

|

||||||

|

# no Warning level messages displayed, use "--disable=all --enable=classes

|

||||||

|

# --disable=W".

|

||||||

|

disable=missing-docstring,

|

||||||

|

too-many-public-methods,

|

||||||

|

too-many-lines,

|

||||||

|

bare-except,

|

||||||

|

## for avoiding weird p3.6 CI linter error

|

||||||

|

## TODO: see later if we can remove this

|

||||||

|

assigning-non-slot,

|

||||||

|

unsupported-assignment-operation,

|

||||||

|

## end

|

||||||

|

line-too-long,

|

||||||

|

fixme,

|

||||||

|

wrong-import-order,

|

||||||

|

ungrouped-imports,

|

||||||

|

wrong-import-position,

|

||||||

|

import-error,

|

||||||

|

invalid-name,

|

||||||

|

too-many-instance-attributes,

|

||||||

|

arguments-differ,

|

||||||

|

arguments-renamed,

|

||||||

|

no-name-in-module,

|

||||||

|

no-member,

|

||||||

|

unsubscriptable-object,

|

||||||

|

raw-checker-failed,

|

||||||

|

bad-inline-option,

|

||||||

|

locally-disabled,

|

||||||

|

file-ignored,

|

||||||

|

suppressed-message,

|

||||||

|

useless-suppression,

|

||||||

|

deprecated-pragma,

|

||||||

|

use-symbolic-message-instead,

|

||||||

|

useless-object-inheritance,

|

||||||

|

too-few-public-methods,

|

||||||

|

too-many-branches,

|

||||||

|

too-many-arguments,

|

||||||

|

too-many-locals,

|

||||||

|

too-many-statements,

|

||||||

|

duplicate-code,

|

||||||

|

not-callable,

|

||||||

|

import-outside-toplevel,

|

||||||

|

logging-fstring-interpolation,

|

||||||

|

logging-not-lazy,

|

||||||

|

unused-argument,

|

||||||

|

no-else-return,

|

||||||

|

chained-comparison,

|

||||||

|

redefined-outer-name

|

||||||

|

|

||||||

|

# Enable the message, report, category or checker with the given id(s). You can

|

||||||

|

# either give multiple identifier separated by comma (,) or put this option

|

||||||

|

# multiple time (only on the command line, not in the configuration file where

|

||||||

|

# it should appear only once). See also the "--disable" option for examples.

|

||||||

|

enable=c-extension-no-member

|

||||||

|

|

||||||

|

|

||||||

|

[REPORTS]

|

||||||

|

|

||||||

|

# Python expression which should return a note less than 10 (10 is the highest

|

||||||

|

# note). You have access to the variables errors warning, statement which

|

||||||

|

# respectively contain the number of errors / warnings messages and the total

|

||||||

|

# number of statements analyzed. This is used by the global evaluation report

|

||||||

|

# (RP0004).

|

||||||

|

evaluation=10.0 - ((float(5 * error + warning + refactor + convention) / statement) * 10)

|

||||||

|

|

||||||

|

# Template used to display messages. This is a python new-style format string

|

||||||

|

# used to format the message information. See doc for all details.

|

||||||

|

#msg-template=

|

||||||

|

|

||||||

|

# Set the output format. Available formats are text, parseable, colorized, json

|

||||||

|

# and msvs (visual studio). You can also give a reporter class, e.g.

|

||||||

|

# mypackage.mymodule.MyReporterClass.

|

||||||

|

output-format=text

|

||||||

|

|

||||||

|

# Tells whether to display a full report or only the messages.

|

||||||

|

reports=no

|

||||||

|

|

||||||

|

# Activate the evaluation score.

|

||||||

|

score=yes

|

||||||

|

|

||||||

|

|

||||||

|

[REFACTORING]

|

||||||

|

|

||||||

|

# Maximum number of nested blocks for function / method body

|

||||||

|

max-nested-blocks=5

|

||||||

|

|

||||||

|

# Complete name of functions that never returns. When checking for

|

||||||

|

# inconsistent-return-statements if a never returning function is called then

|

||||||

|

# it will be considered as an explicit return statement and no message will be

|

||||||

|

# printed.

|

||||||

|

never-returning-functions=sys.exit

|

||||||

|

|

||||||

|

|

||||||

|

[LOGGING]

|

||||||

|

|

||||||

|

# Format style used to check logging format string. `old` means using %

|

||||||

|

# formatting, while `new` is for `{}` formatting.

|

||||||

|

logging-format-style=old

|

||||||

|

|

||||||

|

# Logging modules to check that the string format arguments are in logging

|

||||||

|

# function parameter format.

|

||||||

|

logging-modules=logging

|

||||||

|

|

||||||

|

|

||||||

|

[SPELLING]

|

||||||

|

|

||||||

|

# Limits count of emitted suggestions for spelling mistakes.

|

||||||

|

max-spelling-suggestions=4

|

||||||

|

|

||||||

|

# Spelling dictionary name. Available dictionaries: none. To make it working

|

||||||

|

# install python-enchant package..

|

||||||

|

spelling-dict=

|

||||||

|

|

||||||

|

# List of comma separated words that should not be checked.

|

||||||

|

spelling-ignore-words=

|

||||||

|

|

||||||

|

# A path to a file that contains private dictionary; one word per line.

|

||||||

|

spelling-private-dict-file=

|

||||||

|

|

||||||

|

# Tells whether to store unknown words to indicated private dictionary in

|

||||||

|

# --spelling-private-dict-file option instead of raising a message.

|

||||||

|

spelling-store-unknown-words=no

|

||||||

|

|

||||||

|

|

||||||

|

[MISCELLANEOUS]

|

||||||

|

|

||||||

|

# List of note tags to take in consideration, separated by a comma.

|

||||||

|

notes=FIXME,

|

||||||

|

XXX,

|

||||||

|

TODO

|

||||||

|

|

||||||

|

|

||||||

|

[TYPECHECK]

|

||||||

|

|

||||||

|

# List of decorators that produce context managers, such as

|

||||||

|

# contextlib.contextmanager. Add to this list to register other decorators that

|

||||||

|

# produce valid context managers.

|

||||||

|

contextmanager-decorators=contextlib.contextmanager

|

||||||

|

|

||||||

|

# List of members which are set dynamically and missed by pylint inference

|

||||||

|

# system, and so shouldn't trigger E1101 when accessed. Python regular

|

||||||

|

# expressions are accepted.

|

||||||

|

generated-members=numpy.*,torch.*

|

||||||

|

|

||||||

|

# Tells whether missing members accessed in mixin class should be ignored. A

|

||||||

|

# mixin class is detected if its name ends with "mixin" (case insensitive).

|

||||||

|

ignore-mixin-members=yes

|

||||||

|

|

||||||

|

# Tells whether to warn about missing members when the owner of the attribute

|

||||||

|

# is inferred to be None.

|

||||||

|

ignore-none=yes

|

||||||

|

|

||||||

|

# This flag controls whether pylint should warn about no-member and similar

|

||||||

|

# checks whenever an opaque object is returned when inferring. The inference

|

||||||

|

# can return multiple potential results while evaluating a Python object, but

|

||||||

|

# some branches might not be evaluated, which results in partial inference. In

|

||||||

|

# that case, it might be useful to still emit no-member and other checks for

|

||||||

|

# the rest of the inferred objects.

|

||||||

|

ignore-on-opaque-inference=yes

|

||||||

|

|

||||||

|

# List of class names for which member attributes should not be checked (useful

|

||||||

|

# for classes with dynamically set attributes). This supports the use of

|

||||||

|

# qualified names.

|

||||||

|

ignored-classes=optparse.Values,thread._local,_thread._local

|

||||||

|

|

||||||

|

# List of module names for which member attributes should not be checked

|

||||||

|

# (useful for modules/projects where namespaces are manipulated during runtime

|

||||||

|

# and thus existing member attributes cannot be deduced by static analysis. It

|

||||||

|

# supports qualified module names, as well as Unix pattern matching.

|

||||||

|

ignored-modules=

|

||||||

|

|

||||||

|

# Show a hint with possible names when a member name was not found. The aspect

|

||||||

|

# of finding the hint is based on edit distance.

|

||||||

|

missing-member-hint=yes

|

||||||

|

|

||||||

|

# The minimum edit distance a name should have in order to be considered a

|

||||||

|

# similar match for a missing member name.

|

||||||

|

missing-member-hint-distance=1

|

||||||

|

|

||||||

|

# The total number of similar names that should be taken in consideration when

|

||||||

|

# showing a hint for a missing member.

|

||||||

|

missing-member-max-choices=1

|

||||||

|

|

||||||

|

|

||||||

|

[VARIABLES]

|

||||||

|

|

||||||

|

# List of additional names supposed to be defined in builtins. Remember that

|

||||||

|

# you should avoid defining new builtins when possible.

|

||||||

|

additional-builtins=

|

||||||

|

|

||||||

|

# Tells whether unused global variables should be treated as a violation.

|

||||||

|

allow-global-unused-variables=yes

|

||||||

|

|

||||||

|

# List of strings which can identify a callback function by name. A callback

|

||||||

|

# name must start or end with one of those strings.

|

||||||

|

callbacks=cb_,

|

||||||

|

_cb

|

||||||

|

|

||||||

|

# A regular expression matching the name of dummy variables (i.e. expected to

|

||||||

|

# not be used).

|

||||||

|

dummy-variables-rgx=_+$|(_[a-zA-Z0-9_]*[a-zA-Z0-9]+?$)|dummy|^ignored_|^unused_

|

||||||

|

|

||||||

|

# Argument names that match this expression will be ignored. Default to name

|

||||||

|

# with leading underscore.

|

||||||

|

ignored-argument-names=_.*|^ignored_|^unused_

|

||||||

|

|

||||||

|

# Tells whether we should check for unused import in __init__ files.

|

||||||

|

init-import=no

|

||||||

|

|

||||||

|

# List of qualified module names which can have objects that can redefine

|

||||||

|

# builtins.

|

||||||

|

redefining-builtins-modules=six.moves,past.builtins,future.builtins,builtins,io

|

||||||

|

|

||||||

|

|

||||||

|

[FORMAT]

|

||||||

|

|

||||||

|

# Expected format of line ending, e.g. empty (any line ending), LF or CRLF.

|

||||||

|

expected-line-ending-format=

|

||||||

|

|

||||||

|

# Regexp for a line that is allowed to be longer than the limit.

|

||||||

|

ignore-long-lines=^\s*(# )?<?https?://\S+>?$

|

||||||

|

|

||||||

|

# Number of spaces of indent required inside a hanging or continued line.

|

||||||

|

indent-after-paren=4

|

||||||

|

|

||||||

|

# String used as indentation unit. This is usually " " (4 spaces) or "\t" (1

|

||||||

|

# tab).

|

||||||

|

indent-string=' '

|

||||||

|

|

||||||

|

# Maximum number of characters on a single line.

|

||||||

|

max-line-length=120

|

||||||

|

|

||||||

|

# Maximum number of lines in a module.

|

||||||

|

max-module-lines=1000

|

||||||

|

|

||||||

|

# Allow the body of a class to be on the same line as the declaration if body

|

||||||

|

# contains single statement.

|

||||||

|

single-line-class-stmt=no

|

||||||

|

|

||||||

|

# Allow the body of an if to be on the same line as the test if there is no

|

||||||

|

# else.

|

||||||

|

single-line-if-stmt=no

|

||||||

|

|

||||||

|

|

||||||

|

[SIMILARITIES]

|

||||||

|

|

||||||

|

# Ignore comments when computing similarities.

|

||||||

|

ignore-comments=yes

|

||||||

|

|

||||||

|

# Ignore docstrings when computing similarities.

|

||||||

|

ignore-docstrings=yes

|

||||||

|

|

||||||

|

# Ignore imports when computing similarities.

|

||||||

|

ignore-imports=no

|

||||||

|

|

||||||

|

# Minimum lines number of a similarity.

|

||||||

|

min-similarity-lines=4

|

||||||

|

|

||||||

|

|

||||||

|

[BASIC]

|

||||||

|

|

||||||

|

# Naming style matching correct argument names.

|

||||||

|

argument-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct argument names. Overrides argument-

|

||||||

|

# naming-style.

|

||||||

|

argument-rgx=[a-z_][a-z0-9_]{0,30}$

|

||||||

|

|

||||||

|

# Naming style matching correct attribute names.

|

||||||

|

attr-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct attribute names. Overrides attr-naming-

|

||||||

|

# style.

|

||||||

|

#attr-rgx=

|

||||||

|

|

||||||

|

# Bad variable names which should always be refused, separated by a comma.

|

||||||

|

bad-names=

|

||||||

|

|

||||||

|

# Naming style matching correct class attribute names.

|

||||||

|

class-attribute-naming-style=any

|

||||||

|

|

||||||

|

# Regular expression matching correct class attribute names. Overrides class-

|

||||||

|

# attribute-naming-style.

|

||||||

|

#class-attribute-rgx=

|

||||||

|

|

||||||

|

# Naming style matching correct class names.

|

||||||

|

class-naming-style=PascalCase

|

||||||

|

|

||||||

|

# Regular expression matching correct class names. Overrides class-naming-

|

||||||

|

# style.

|

||||||

|

#class-rgx=

|

||||||

|

|

||||||

|

# Naming style matching correct constant names.

|

||||||

|

const-naming-style=UPPER_CASE

|

||||||

|

|

||||||

|

# Regular expression matching correct constant names. Overrides const-naming-

|

||||||

|

# style.

|

||||||

|

#const-rgx=

|

||||||

|

|

||||||

|

# Minimum line length for functions/classes that require docstrings, shorter

|

||||||

|

# ones are exempt.

|

||||||

|

docstring-min-length=-1

|

||||||

|

|

||||||

|

# Naming style matching correct function names.

|

||||||

|

function-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct function names. Overrides function-

|

||||||

|

# naming-style.

|

||||||

|

#function-rgx=

|

||||||

|

|

||||||

|

# Good variable names which should always be accepted, separated by a comma.

|

||||||

|

good-names=i,

|

||||||

|

j,

|

||||||

|

k,

|

||||||

|

x,

|

||||||

|

ex,

|

||||||

|

Run,

|

||||||

|

_

|

||||||

|

|

||||||

|

# Include a hint for the correct naming format with invalid-name.

|

||||||

|

include-naming-hint=no

|

||||||

|

|

||||||

|

# Naming style matching correct inline iteration names.

|

||||||

|

inlinevar-naming-style=any

|

||||||

|

|

||||||

|

# Regular expression matching correct inline iteration names. Overrides

|

||||||

|

# inlinevar-naming-style.

|

||||||

|

#inlinevar-rgx=

|

||||||

|

|

||||||

|

# Naming style matching correct method names.

|

||||||

|

method-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct method names. Overrides method-naming-

|

||||||

|

# style.

|

||||||

|

#method-rgx=

|

||||||

|

|

||||||

|

# Naming style matching correct module names.

|

||||||

|

module-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct module names. Overrides module-naming-

|

||||||

|

# style.

|

||||||

|

#module-rgx=

|

||||||

|

|

||||||

|

# Colon-delimited sets of names that determine each other's naming style when

|

||||||

|

# the name regexes allow several styles.

|

||||||

|

name-group=

|

||||||

|

|

||||||

|

# Regular expression which should only match function or class names that do

|

||||||

|

# not require a docstring.

|

||||||

|

no-docstring-rgx=^_

|

||||||

|

|

||||||

|

# List of decorators that produce properties, such as abc.abstractproperty. Add

|

||||||

|

# to this list to register other decorators that produce valid properties.

|

||||||

|

# These decorators are taken in consideration only for invalid-name.

|

||||||

|

property-classes=abc.abstractproperty

|

||||||

|

|

||||||

|

# Naming style matching correct variable names.

|

||||||

|

variable-naming-style=snake_case

|

||||||

|

|

||||||

|

# Regular expression matching correct variable names. Overrides variable-

|

||||||

|

# naming-style.

|

||||||

|

variable-rgx=[a-z_][a-z0-9_]{0,30}$

|

||||||

|

|

||||||

|

|

||||||

|

[STRING]

|

||||||

|

|

||||||

|

# This flag controls whether the implicit-str-concat-in-sequence should

|

||||||

|

# generate a warning on implicit string concatenation in sequences defined over

|

||||||

|

# several lines.

|

||||||

|

check-str-concat-over-line-jumps=no

|

||||||

|

|

||||||

|

|

||||||

|

[IMPORTS]

|

||||||

|

|

||||||

|

# Allow wildcard imports from modules that define __all__.

|

||||||

|

allow-wildcard-with-all=no

|

||||||

|

|

||||||

|

# Analyse import fallback blocks. This can be used to support both Python 2 and

|

||||||

|

# 3 compatible code, which means that the block might have code that exists

|

||||||

|

# only in one or another interpreter, leading to false positives when analysed.

|

||||||

|

analyse-fallback-blocks=no

|

||||||

|

|

||||||

|

# Deprecated modules which should not be used, separated by a comma.

|

||||||

|

deprecated-modules=optparse,tkinter.tix

|

||||||

|

|

||||||

|

# Create a graph of external dependencies in the given file (report RP0402 must

|

||||||

|

# not be disabled).

|

||||||

|

ext-import-graph=

|

||||||

|

|

||||||

|

# Create a graph of every (i.e. internal and external) dependencies in the

|

||||||

|

# given file (report RP0402 must not be disabled).

|

||||||

|

import-graph=

|

||||||

|

|

||||||

|

# Create a graph of internal dependencies in the given file (report RP0402 must

|

||||||

|

# not be disabled).

|

||||||

|

int-import-graph=

|

||||||

|

|

||||||

|

# Force import order to recognize a module as part of the standard

|

||||||

|

# compatibility libraries.

|

||||||

|

known-standard-library=

|

||||||

|

|

||||||

|

# Force import order to recognize a module as part of a third party library.

|

||||||

|

known-third-party=enchant

|

||||||

|

|

||||||

|

|

||||||

|

[CLASSES]

|

||||||

|

|

||||||

|

# List of method names used to declare (i.e. assign) instance attributes.

|

||||||

|

defining-attr-methods=__init__,

|

||||||

|

__new__,

|

||||||

|

setUp

|

||||||

|

|

||||||

|

# List of member names, which should be excluded from the protected access

|

||||||

|

# warning.

|

||||||

|

exclude-protected=_asdict,

|

||||||

|

_fields,

|

||||||

|

_replace,

|

||||||

|

_source,

|

||||||

|

_make

|

||||||

|

|

||||||

|

# List of valid names for the first argument in a class method.

|

||||||

|

valid-classmethod-first-arg=cls

|

||||||

|

|

||||||

|

# List of valid names for the first argument in a metaclass class method.

|

||||||

|

valid-metaclass-classmethod-first-arg=cls

|

||||||

|

|

||||||

|

|

||||||

|

[DESIGN]

|

||||||

|

|

||||||

|

# Maximum number of arguments for function / method.

|

||||||

|

max-args=5

|

||||||

|

|

||||||

|

# Maximum number of attributes for a class (see R0902).

|

||||||

|

max-attributes=7

|

||||||

|

|

||||||

|

# Maximum number of boolean expressions in an if statement.

|

||||||

|

max-bool-expr=5

|

||||||

|

|

||||||

|

# Maximum number of branch for function / method body.

|

||||||

|

max-branches=12

|

||||||

|

|

||||||

|

# Maximum number of locals for function / method body.

|

||||||

|

max-locals=15

|

||||||

|

|

||||||

|

# Maximum number of parents for a class (see R0901).

|

||||||

|

max-parents=15

|

||||||

|

|

||||||

|

# Maximum number of public methods for a class (see R0904).

|

||||||

|

max-public-methods=20

|

||||||

|

|

||||||

|

# Maximum number of return / yield for function / method body.

|

||||||

|

max-returns=6

|

||||||

|

|

||||||

|

# Maximum number of statements in function / method body.

|

||||||

|

max-statements=50

|

||||||

|

|

||||||

|

# Minimum number of public methods for a class (see R0903).

|

||||||

|

min-public-methods=2

|

||||||

|

|

||||||

|

|

||||||

|

[EXCEPTIONS]

|

||||||

|

|

||||||

|

# Exceptions that will emit a warning when being caught. Defaults to

|

||||||

|

# "BaseException, Exception".

|

||||||

|

overgeneral-exceptions=builtins.BaseException,

|

||||||

|

builtins.Exception

|

||||||

14

MANIFEST.in

Normal file

14

MANIFEST.in

Normal file

@@ -0,0 +1,14 @@

|

|||||||

|

include README.md

|

||||||

|

include LICENSE.txt

|

||||||

|

include requirements.*.txt

|

||||||

|

include *.cff

|

||||||

|

include requirements.txt

|

||||||

|

include matcha/VERSION

|

||||||

|

recursive-include matcha *.json

|

||||||

|

recursive-include matcha *.html

|

||||||

|

recursive-include matcha *.png

|

||||||

|

recursive-include matcha *.md

|

||||||

|

recursive-include matcha *.py

|

||||||

|

recursive-include matcha *.pyx

|

||||||

|

recursive-exclude tests *

|

||||||

|

prune tests*

|

||||||

42

Makefile

Normal file

42

Makefile

Normal file

@@ -0,0 +1,42 @@

|

|||||||

|

|

||||||

|

help: ## Show help

|

||||||

|

@grep -E '^[.a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[36m%-30s\033[0m %s\n", $$1, $$2}'

|

||||||

|

|

||||||

|

clean: ## Clean autogenerated files

|

||||||

|

rm -rf dist

|

||||||

|

find . -type f -name "*.DS_Store" -ls -delete

|

||||||

|

find . | grep -E "(__pycache__|\.pyc|\.pyo)" | xargs rm -rf

|

||||||

|

find . | grep -E ".pytest_cache" | xargs rm -rf

|

||||||

|

find . | grep -E ".ipynb_checkpoints" | xargs rm -rf

|

||||||

|

rm -f .coverage

|

||||||

|

|

||||||

|

clean-logs: ## Clean logs

|

||||||

|

rm -rf logs/**

|

||||||

|

|

||||||

|

create-package: ## Create wheel and tar gz

|

||||||

|

rm -rf dist/

|

||||||

|

python setup.py bdist_wheel --plat-name=manylinux1_x86_64

|

||||||

|

python setup.py sdist

|

||||||

|

python -m twine upload dist/* --verbose --skip-existing

|

||||||

|

|

||||||

|

format: ## Run pre-commit hooks

|

||||||

|

pre-commit run -a

|

||||||

|

|

||||||

|

sync: ## Merge changes from main branch to your current branch

|

||||||

|

git pull

|

||||||

|

git pull origin main

|

||||||

|

|

||||||

|

test: ## Run not slow tests

|

||||||

|

pytest -k "not slow"

|

||||||

|

|

||||||

|

test-full: ## Run all tests

|

||||||

|

pytest

|

||||||

|

|

||||||

|

train-ljspeech: ## Train the model

|

||||||

|

python matcha/train.py experiment=ljspeech

|

||||||

|

|

||||||

|

train-ljspeech-min: ## Train the model with minimum memory

|

||||||

|

python matcha/train.py experiment=ljspeech_min_memory

|

||||||

|

|

||||||

|

start_app: ## Start the app

|

||||||

|

python matcha/app.py

|

||||||

315

README.md

Normal file

315

README.md

Normal file

@@ -0,0 +1,315 @@

|

|||||||

|

<div align="center">

|

||||||

|

|

||||||

|

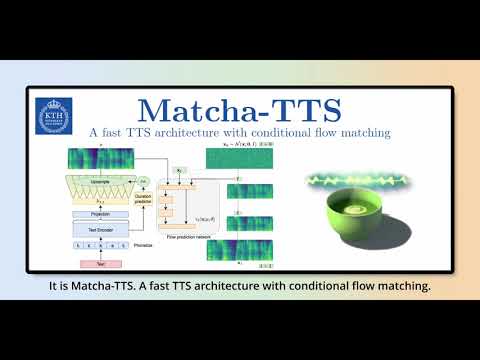

# 🍵 Matcha-TTS: A fast TTS architecture with conditional flow matching

|

||||||

|

|

||||||

|

### [Shivam Mehta](https://www.kth.se/profile/smehta), [Ruibo Tu](https://www.kth.se/profile/ruibo), [Jonas Beskow](https://www.kth.se/profile/beskow), [Éva Székely](https://www.kth.se/profile/szekely), and [Gustav Eje Henter](https://people.kth.se/~ghe/)

|

||||||

|

|

||||||

|

[](https://www.python.org/downloads/release/python-3100/)

|

||||||

|

[](https://pytorch.org/get-started/locally/)

|

||||||

|

[](https://pytorchlightning.ai/)

|

||||||

|

[](https://hydra.cc/)

|

||||||

|

[](https://black.readthedocs.io/en/stable/)

|

||||||

|

[](https://pycqa.github.io/isort/)

|

||||||

|

[](https://pepy.tech/projects/matcha-tts)

|

||||||

|

<p style="text-align: center;">

|

||||||

|

<img src="https://shivammehta25.github.io/Matcha-TTS/images/logo.png" height="128"/>

|

||||||

|

</p>

|

||||||

|

|

||||||

|

</div>

|

||||||

|

|

||||||

|

> This is the official code implementation of 🍵 Matcha-TTS [ICASSP 2024].

|

||||||

|

|

||||||

|

We propose 🍵 Matcha-TTS, a new approach to non-autoregressive neural TTS, that uses [conditional flow matching](https://arxiv.org/abs/2210.02747) (similar to [rectified flows](https://arxiv.org/abs/2209.03003)) to speed up ODE-based speech synthesis. Our method:

|

||||||

|

|

||||||

|

- Is probabilistic

|

||||||

|

- Has compact memory footprint

|

||||||

|

- Sounds highly natural

|

||||||

|

- Is very fast to synthesise from

|

||||||

|

|

||||||

|

Check out our [demo page](https://shivammehta25.github.io/Matcha-TTS) and read [our ICASSP 2024 paper](https://arxiv.org/abs/2309.03199) for more details.

|

||||||

|

|

||||||

|

[Pre-trained models](https://drive.google.com/drive/folders/17C_gYgEHOxI5ZypcfE_k1piKCtyR0isJ?usp=sharing) will be automatically downloaded with the CLI or gradio interface.

|

||||||

|

|

||||||

|

You can also [try 🍵 Matcha-TTS in your browser on HuggingFace 🤗 spaces](https://huggingface.co/spaces/shivammehta25/Matcha-TTS).

|

||||||

|

|

||||||

|

## Teaser video

|

||||||

|

|

||||||

|

[](https://youtu.be/xmvJkz3bqw0)

|

||||||

|

|

||||||

|

## Installation

|

||||||

|

|

||||||

|

1. Create an environment (suggested but optional)

|

||||||

|

|

||||||

|

```

|

||||||

|

conda create -n matcha-tts python=3.10 -y

|

||||||

|

conda activate matcha-tts

|

||||||

|

```

|

||||||

|

|

||||||

|

2. Install Matcha TTS using pip or from source

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install matcha-tts

|

||||||

|

```

|

||||||

|

|

||||||

|

from source

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install git+https://github.com/shivammehta25/Matcha-TTS.git

|

||||||

|

cd Matcha-TTS

|

||||||

|

pip install -e .

|

||||||

|

```

|

||||||

|

|

||||||

|

3. Run CLI / gradio app / jupyter notebook

|

||||||

|

|

||||||

|

```bash

|

||||||

|

# This will download the required models

|

||||||

|

matcha-tts --text "<INPUT TEXT>"

|

||||||

|

```

|

||||||

|

|

||||||

|

or

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts-app

|

||||||

|

```

|

||||||

|

|

||||||

|

or open `synthesis.ipynb` on jupyter notebook

|

||||||

|

|

||||||

|

### CLI Arguments

|

||||||

|

|

||||||

|

- To synthesise from given text, run:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --text "<INPUT TEXT>"

|

||||||

|

```

|

||||||

|

|

||||||

|

- To synthesise from a file, run:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --file <PATH TO FILE>

|

||||||

|

```

|

||||||

|

|

||||||

|

- To batch synthesise from a file, run:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --file <PATH TO FILE> --batched

|

||||||

|

```

|

||||||

|

|

||||||

|

Additional arguments

|

||||||

|

|

||||||

|

- Speaking rate

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --text "<INPUT TEXT>" --speaking_rate 1.0

|

||||||

|

```

|

||||||

|

|

||||||

|

- Sampling temperature

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --text "<INPUT TEXT>" --temperature 0.667

|

||||||

|

```

|

||||||

|

|

||||||

|

- Euler ODE solver steps

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --text "<INPUT TEXT>" --steps 10

|

||||||

|

```

|

||||||

|

|

||||||

|

## Train with your own dataset

|

||||||

|

|

||||||

|

Let's assume we are training with LJ Speech

|

||||||

|

|

||||||

|

1. Download the dataset from [here](https://keithito.com/LJ-Speech-Dataset/), extract it to `data/LJSpeech-1.1`, and prepare the file lists to point to the extracted data like for [item 5 in the setup of the NVIDIA Tacotron 2 repo](https://github.com/NVIDIA/tacotron2#setup).

|

||||||

|

|

||||||

|

2. Clone and enter the Matcha-TTS repository

|

||||||

|

|

||||||

|

```bash

|

||||||

|

git clone https://github.com/shivammehta25/Matcha-TTS.git

|

||||||

|

cd Matcha-TTS

|

||||||

|

```

|

||||||

|

|

||||||

|

3. Install the package from source

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install -e .

|

||||||

|

```

|

||||||

|

|

||||||

|

4. Go to `configs/data/ljspeech.yaml` and change

|

||||||

|

|

||||||

|

```yaml

|

||||||

|

train_filelist_path: data/filelists/ljs_audio_text_train_filelist.txt

|

||||||

|

valid_filelist_path: data/filelists/ljs_audio_text_val_filelist.txt

|

||||||

|

```

|

||||||

|

|

||||||

|

5. Generate normalisation statistics with the yaml file of dataset configuration

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-data-stats -i ljspeech.yaml

|

||||||

|

# Output:

|

||||||

|

#{'mel_mean': -5.53662231756592, 'mel_std': 2.1161014277038574}

|

||||||

|

```

|

||||||

|

|

||||||

|

Update these values in `configs/data/ljspeech.yaml` under `data_statistics` key.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

data_statistics: # Computed for ljspeech dataset

|

||||||

|

mel_mean: -5.536622

|

||||||

|

mel_std: 2.116101

|

||||||

|

```

|

||||||

|

|

||||||

|

to the paths of your train and validation filelists.

|

||||||

|

|

||||||

|

6. Run the training script

|

||||||

|

|

||||||

|

```bash

|

||||||

|

make train-ljspeech

|

||||||

|

```

|

||||||

|

|

||||||

|

or

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python matcha/train.py experiment=ljspeech

|

||||||

|

```

|

||||||

|

|

||||||

|

- for a minimum memory run

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python matcha/train.py experiment=ljspeech_min_memory

|

||||||

|

```

|

||||||

|

|

||||||

|

- for multi-gpu training, run

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python matcha/train.py experiment=ljspeech trainer.devices=[0,1]

|

||||||

|

```

|

||||||

|

|

||||||

|

7. Synthesise from the custom trained model

|

||||||

|

|

||||||

|

```bash

|

||||||

|

matcha-tts --text "<INPUT TEXT>" --checkpoint_path <PATH TO CHECKPOINT>

|

||||||

|

```

|

||||||

|

|

||||||

|

## ONNX support

|

||||||

|

|

||||||

|

> Special thanks to [@mush42](https://github.com/mush42) for implementing ONNX export and inference support.

|

||||||

|

|

||||||

|

It is possible to export Matcha checkpoints to [ONNX](https://onnx.ai/), and run inference on the exported ONNX graph.

|

||||||

|

|

||||||

|

### ONNX export

|

||||||

|

|

||||||

|

To export a checkpoint to ONNX, first install ONNX with

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install onnx

|

||||||

|

```

|

||||||

|

|

||||||

|

then run the following:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python3 -m matcha.onnx.export matcha.ckpt model.onnx --n-timesteps 5

|

||||||

|

```

|

||||||

|

|

||||||

|

Optionally, the ONNX exporter accepts **vocoder-name** and **vocoder-checkpoint** arguments. This enables you to embed the vocoder in the exported graph and generate waveforms in a single run (similar to end-to-end TTS systems).

|

||||||

|

|

||||||

|

**Note** that `n_timesteps` is treated as a hyper-parameter rather than a model input. This means you should specify it during export (not during inference). If not specified, `n_timesteps` is set to **5**.

|

||||||

|

|

||||||

|

**Important**: for now, torch>=2.1.0 is needed for export since the `scaled_product_attention` operator is not exportable in older versions. Until the final version is released, those who want to export their models must install torch>=2.1.0 manually as a pre-release.

|

||||||

|

|

||||||

|

### ONNX Inference

|

||||||

|

|

||||||

|

To run inference on the exported model, first install `onnxruntime` using

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install onnxruntime

|

||||||

|

pip install onnxruntime-gpu # for GPU inference

|

||||||

|

```

|

||||||

|

|

||||||

|

then use the following:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python3 -m matcha.onnx.infer model.onnx --text "hey" --output-dir ./outputs

|

||||||

|

```

|

||||||

|

|

||||||

|

You can also control synthesis parameters:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python3 -m matcha.onnx.infer model.onnx --text "hey" --output-dir ./outputs --temperature 0.4 --speaking_rate 0.9 --spk 0

|

||||||

|

```

|

||||||

|

|

||||||

|

To run inference on **GPU**, make sure to install **onnxruntime-gpu** package, and then pass `--gpu` to the inference command:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python3 -m matcha.onnx.infer model.onnx --text "hey" --output-dir ./outputs --gpu

|

||||||

|

```

|

||||||

|

|

||||||

|

If you exported only Matcha to ONNX, this will write mel-spectrogram as graphs and `numpy` arrays to the output directory.

|

||||||

|

If you embedded the vocoder in the exported graph, this will write `.wav` audio files to the output directory.

|

||||||

|

|

||||||

|

If you exported only Matcha to ONNX, and you want to run a full TTS pipeline, you can pass a path to a vocoder model in `ONNX` format:

|

||||||

|

|

||||||

|

```bash

|

||||||

|

python3 -m matcha.onnx.infer model.onnx --text "hey" --output-dir ./outputs --vocoder hifigan.small.onnx

|

||||||

|

```

|

||||||

|

|

||||||

|

This will write `.wav` audio files to the output directory.

|

||||||

|

|

||||||

|

## Extract phoneme alignments from Matcha-TTS

|

||||||

|

|

||||||

|

If the dataset is structured as

|

||||||

|

|

||||||

|

```bash

|

||||||

|

data/

|

||||||

|

└── LJSpeech-1.1

|

||||||

|

├── metadata.csv

|

||||||

|

├── README

|

||||||

|

├── test.txt

|

||||||

|

├── train.txt

|

||||||

|

├── val.txt

|

||||||

|

└── wavs

|

||||||

|

```