mirror of

https://github.com/snakers4/silero-vad.git

synced 2026-02-05 18:09:22 +08:00

207 lines

9.9 KiB

Markdown


[Email](mailto:hello@silero.ai) [Telegram](https://t.me/joinchat/Bv9tjhpdXTI22OUgpOIIDg) [License](https://github.com/snakers4/silero-vad/blob/master/LICENSE)


[PyTorch Hub](https://pytorch.org/hub/snakers4_silero-vad/) (coming soon)


[Open In Colab](https://colab.research.google.com/github/snakers4/silero-vad/blob/master/silero-vad.ipynb)


- [Silero VAD](#silero-vad)
  - [Getting Started](#getting-started)
    - [PyTorch](#pytorch)
    - [ONNX](#onnx)
  - [Metrics](#metrics)
    - [Performance Metrics](#performance-metrics)
    - [VAD Quality Metrics](#vad-quality-metrics)
  - [FAQ](#faq)
    - [How VAD Works](#how-vad-works)
    - [VAD Quality Metrics Methodology](#vad-quality-metrics-methodology)
    - [How Number Detector Works](#how-number-detector-works)
    - [How Language Classifier Works](#how-language-classifier-works)
  - [Contact](#contact)
    - [Get in Touch](#get-in-touch)
    - [Commercial Inquiries](#commercial-inquiries)

# Silero VAD


**Silero VAD: pre-trained enterprise-grade Voice Activity Detector (VAD), Number Detector and Language Classifier.**


Enterprise-grade Speech Products made refreshingly simple (see our [STT](https://github.com/snakers4/silero-models) models).


Currently, there are hardly any high quality / modern / free / public voice activity detectors except for the WebRTC Voice Activity Detector ([link](https://github.com/wiseman/py-webrtcvad)). However, WebRTC is starting to show its age and suffers from many false positives.


Also, in some cases it is crucial to be able to anonymize large-scale spoken corpora (i.e. remove personal data). Typically, data is considered private / sensitive if it contains (i) a name or (ii) some private ID. Name recognition is a highly subjective matter that depends on the locale and business case, but voice activity detection and number detection are quite general tasks.


**Key features:**


- Modern, portable;
- Low memory footprint;
- Superior metrics to WebRTC;
- Trained on huge spoken corpora and noise / sound libraries;
- Slower than WebRTC, but fast enough for IoT / edge / mobile applications;
- Unlike WebRTC (which mostly distinguishes silence from voice), our VAD can distinguish voice from noise / music / silence.


**Typical use cases:**


- Spoken corpora anonymization;
- Can be used together with WebRTC;
- Voice activity detection for IoT / edge / mobile use cases;
- Data cleaning and preparation, number and voice detection in general;
- The PyTorch and ONNX versions support a wide variety of deployment options and backends.


## Getting Started


The models are small enough to be included directly in this repository. Newer models will directly replace older ones.


Currently we provide the following functionality:


| PyTorch            | ONNX               | VAD                | Number Detector | Language Clf | Languages              | Colab |
|--------------------|--------------------|--------------------|-----------------|--------------|------------------------|-------|
| :heavy_check_mark: | :heavy_check_mark: | :heavy_check_mark: |                 |              | `ru`, `en`, `de`, `es` | [Open In Colab](https://colab.research.google.com/github/snakers4/silero-vad/blob/master/silero-vad.ipynb) |


**Version history:**


| Version | Date        | Comment                                                                            |
|---------|-------------|------------------------------------------------------------------------------------|
| `v1`    | 2020-12-15  | Initial release                                                                    |
| `v2`    | coming soon | Add Number Detector or Language Classifier heads, lift 250 ms chunk VAD limitation |


### PyTorch


[Open In Colab](https://colab.research.google.com/github/snakers4/silero-vad/blob/master/silero-vad.ipynb)


[PyTorch Hub](https://pytorch.org/hub/snakers4_silero-vad/) (coming soon)


```python
import torch
from pprint import pprint

torch.set_num_threads(1)

model, utils = torch.hub.load(repo_or_dir='snakers4/silero-vad',
                              model='silero_vad',
                              force_reload=True)

(get_speech_ts,
 _, read_audio,
 _, _, _) = utils

files_dir = torch.hub.get_dir() + '/snakers4_silero-vad_master/files'

wav = read_audio(f'{files_dir}/en.wav')

# get speech timestamps from the full audio file
speech_timestamps = get_speech_ts(wav, model,
                                  num_steps=4)
pprint(speech_timestamps)
```


### ONNX


[Open In Colab](https://colab.research.google.com/github/snakers4/silero-vad/blob/master/silero-vad.ipynb)


You can run our model anywhere you can import an ONNX model or run ONNX Runtime.


```python
import torch  # still needed: the helper utils are loaded via torch.hub
import onnxruntime
from pprint import pprint

_, utils = torch.hub.load(repo_or_dir='snakers4/silero-vad',
                          model='silero_vad',
                          force_reload=True)

(get_speech_ts,
 _, read_audio,
 _, _, _) = utils

files_dir = torch.hub.get_dir() + '/snakers4_silero-vad_master/files'


def init_onnx_model(model_path: str):
    return onnxruntime.InferenceSession(model_path)


def validate_onnx(model, inputs):
    # run the ONNX session on a batch of windows and convert outputs back to tensors
    with torch.no_grad():
        ort_inputs = {'input': inputs.cpu().numpy()}
        outs = model.run(None, ort_inputs)
        outs = [torch.Tensor(x) for x in outs]
    return outs


model = init_onnx_model(f'{files_dir}/model.onnx')
wav = read_audio(f'{files_dir}/en.wav')

# get speech timestamps from the full audio file
speech_timestamps = get_speech_ts(wav, model, num_steps=4, run_function=validate_onnx)
pprint(speech_timestamps)
```


## Metrics


### Performance Metrics


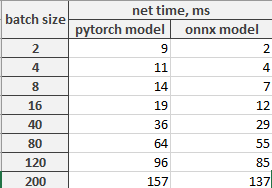

All speed tests were run on SPECS using 1 thread:


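```python
import onnxruntime
import torch
torch.set_num_threads(1)  # pytorch
sess_options = onnxruntime.SessionOptions()  # onnx: pass as InferenceSession(path, sess_options=sess_options)
sess_options.intra_op_num_threads = 1  # onnx
sess_options.inter_op_num_threads = 1  # onnx
```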


#### Streaming speed


Streaming speed depends on 2 variables:


- **num_steps** - the number of overlapping windows the audio is split into. Our postprocessing class keeps the previous 250 ms chunk in memory; each new 250 ms chunk is appended to it, and the resulting 500 ms buffer is split into **num_steps** overlapping windows, each 250 ms long.
- **number of audio streams**

So the **batch size** for streaming is **num_steps * number of audio streams**. The time between receiving a new audio chunk from a stream and getting the result is shown in the picture below:


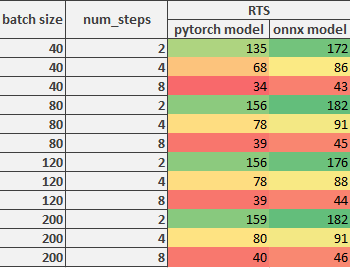
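
To make the batch size formula concrete, here is a minimal sketch (not this repository's code) that flattens the overlapping windows of several streams into one batch and then regroups the per-window model outputs by stream. `build_batch`, `split_outputs` and the list-of-floats window representation are hypothetical, chosen only to keep the example dependency-free.

```python
from typing import List, Sequence, Tuple

Window = List[float]  # hypothetical: one 250 ms window as a list of samples


def build_batch(windows_per_stream: Sequence[Sequence[Window]]) -> Tuple[List[Window], List[int]]:
    """Flatten every stream's windows into one batch and record which stream owns each row."""
    batch: List[Window] = []
    owners: List[int] = []
    for stream_id, windows in enumerate(windows_per_stream):
        batch.extend(windows)
        owners.extend([stream_id] * len(windows))
    return batch, owners


def split_outputs(outputs: Sequence[float], owners: Sequence[int], num_streams: int) -> List[List[float]]:
    """Regroup per-window model outputs back into one list per stream."""
    per_stream: List[List[float]] = [[] for _ in range(num_streams)]
    for value, stream_id in zip(outputs, owners):
        per_stream[stream_id].append(value)
    return per_stream


# 3 streams, num_steps = 4 windows each -> batch size 4 * 3 = 12
num_steps, num_streams = 4, 3
streams = [[[0.0] * 4000 for _ in range(num_steps)] for _ in range(num_streams)]
batch, owners = build_batch(streams)
print(len(batch))  # 12
```

Running all streams through the model as one batch trades a little latency per stream for better hardware utilization; the `owners` list is what lets the results be routed back.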

#### Full audio processing speed


**RTS** (real time speed) for full audio processing depends on **num_steps** (see the previous paragraph) and **batch size** (a bigger batch size is better).
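
To see why **num_steps** matters, here is a back-of-the-envelope sketch counting how many 250 ms windows, and how many batched model calls, a full file needs. It assumes 16 kHz audio and a hop of `window / num_steps` samples between consecutive windows; that hop is an assumption for illustration, not a description of `get_speech_ts` internals, and `count_windows` / `count_batches` are hypothetical helpers.

```python
import math

SAMPLE_RATE = 16_000                 # assumption: 16 kHz mono audio
WINDOW = SAMPLE_RATE * 250 // 1000   # 250 ms window -> 4000 samples


def count_windows(num_samples: int, num_steps: int) -> int:
    """Number of windows if consecutive windows start WINDOW // num_steps samples apart."""
    hop = WINDOW // num_steps
    return math.ceil(num_samples / hop)


def count_batches(num_samples: int, num_steps: int, batch_size: int) -> int:
    """Number of model calls when windows are grouped into batches of batch_size."""
    return math.ceil(count_windows(num_samples, num_steps) / batch_size)


ten_seconds = 10 * SAMPLE_RATE
for steps in (4, 8):
    print(steps, count_windows(ten_seconds, steps), count_batches(ten_seconds, steps, batch_size=200))
```

This prints `4 160 1` and `8 320 2`: doubling **num_steps** doubles the number of windows to evaluate, while a larger batch size packs them into fewer, larger model calls.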


### VAD Quality Metrics


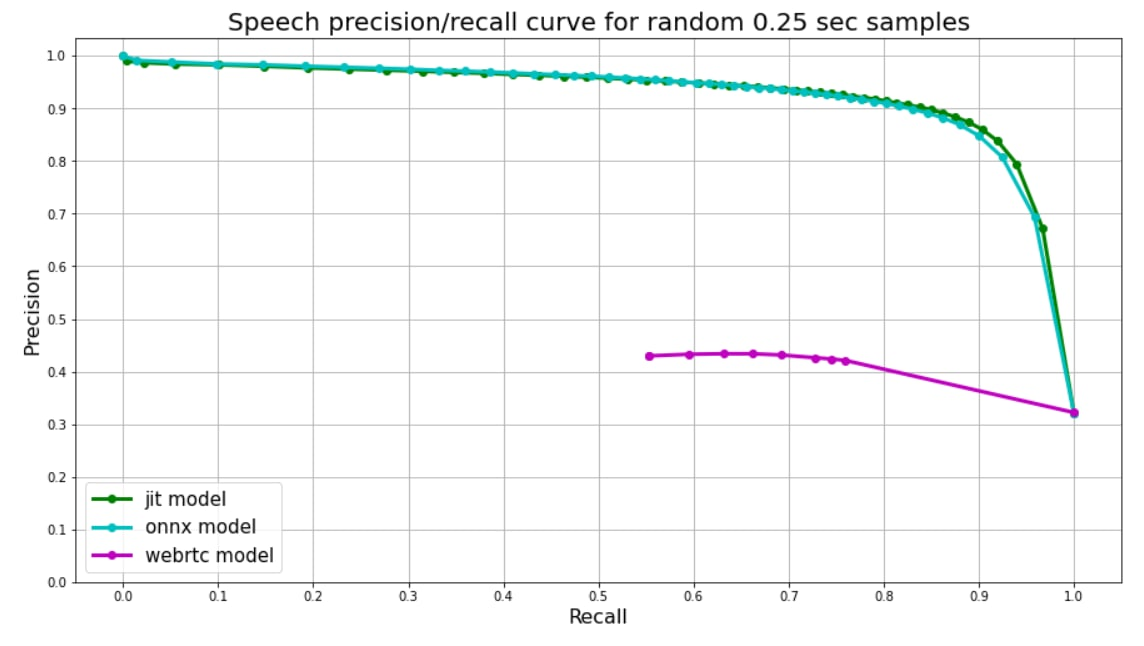

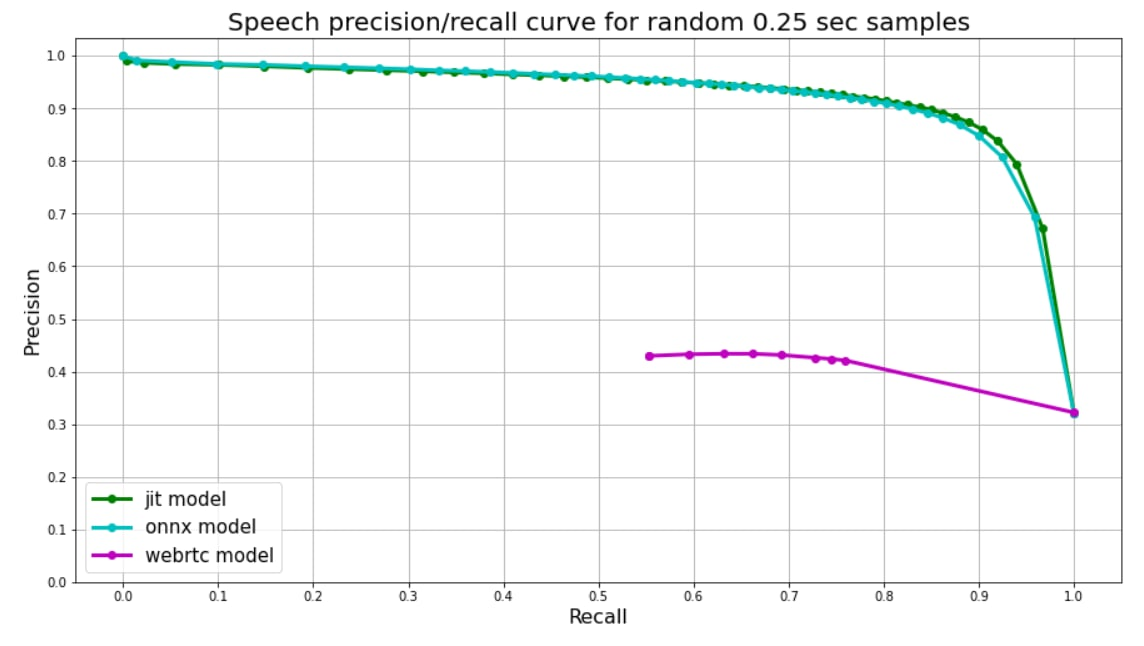

We use random 250 ms audio chunks for validation. The speech to non-speech ratio among chunks is about 50/50 (i.e. balanced). Speech chunks are sampled from real audio in four languages (English, Russian, Spanish, German), and then random background noise is added to some of them (~40%).


Since our VAD (only the VAD; the other networks are more flexible) was trained on chunks of the same length, the model's output is just one float from 0 to 1, the **speech probability**. We use these speech probabilities as thresholds for the precision-recall curve. This approach can be extended to 100 - 150 ms chunks (coming soon); audio shorter than 100 - 150 ms cannot be confidently classified as speech.
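
A minimal sketch of this kind of evaluation, assuming one speech probability and one 0/1 ground-truth label per 250 ms chunk: every distinct probability is used as a threshold, and precision and recall are computed at each one. `precision_recall_points` is a hypothetical helper, not part of this repository.

```python
from typing import List, Sequence, Tuple


def precision_recall_points(probs: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for every distinct probability used as a threshold.

    A chunk is predicted as speech when its probability is >= the threshold;
    labels are 1 for speech chunks and 0 for non-speech chunks.
    """
    total_speech = sum(labels)
    points = []
    for threshold in sorted(set(probs), reverse=True):
        predicted = [p >= threshold for p in probs]
        tp = sum(1 for pred, y in zip(predicted, labels) if pred and y == 1)
        fp = sum(1 for pred, y in zip(predicted, labels) if pred and y == 0)
        precision = tp / (tp + fp)  # tp + fp >= 1: the threshold itself is one of the probs
        recall = tp / total_speech if total_speech else 0.0
        points.append((threshold, precision, recall))
    return points


# Toy example: four chunks, two of which contain speech.
for point in precision_recall_points([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]):
    print(point)
```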


[WebRTC](https://github.com/wiseman/py-webrtcvad) splits audio into frames, and each frame gets a binary decision (0 **or** 1). We use 30 ms frames for WebRTC, so each 250 ms chunk is split into 8 frames, and their **mean** value is used as the threshold for the plot.
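
The WebRTC chunk score can be sketched as below, assuming 16 kHz, 16-bit mono PCM: a 30 ms frame is then 960 bytes, and a 250 ms chunk (8000 bytes) holds 8 full frames, with the trailing 10 ms ignored. The per-frame decision is passed in as a callable, and `webrtc_chunk_score` is a hypothetical helper.

```python
from typing import Callable

SAMPLE_RATE = 16_000   # assumption: 16 kHz mono audio
BYTES_PER_SAMPLE = 2   # assumption: 16-bit PCM
FRAME_MS = 30
FRAME_BYTES = SAMPLE_RATE * FRAME_MS // 1000 * BYTES_PER_SAMPLE  # 960 bytes


def webrtc_chunk_score(chunk_pcm: bytes, is_speech: Callable[[bytes], bool]) -> float:
    """Mean of the 0/1 frame decisions over all full 30 ms frames in the chunk."""
    n_frames = len(chunk_pcm) // FRAME_BYTES  # 250 ms chunk -> 8 full frames
    if n_frames == 0:
        raise ValueError("chunk is shorter than one 30 ms frame")
    decisions = [
        1 if is_speech(chunk_pcm[i * FRAME_BYTES:(i + 1) * FRAME_BYTES]) else 0
        for i in range(n_frames)
    ]
    return sum(decisions) / n_frames


# Stub decision: treat any frame containing a non-zero byte as speech.
chunk = bytes([1]) * 3840 + bytes(4160)  # 250 ms: first 4 frames "speech", the rest silence
print(webrtc_chunk_score(chunk, lambda frame: any(frame)))  # 0.5
```

With py-webrtcvad installed, the stub could be replaced by `lambda frame: vad.is_speech(frame, 16000)` for a `vad = webrtcvad.Vad(mode)` instance.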


## FAQ


### How VAD Works


- Audio is split into 250 ms chunks;
- The VAD keeps a record of the previous chunk (or zeros at the beginning of the stream);
- Then this 500 ms of audio (250 ms + 250 ms) is split into N (typically 4 or 8) windows, and the model is applied to this batch of windows. Each window is 250 ms long (naturally, the windows overlap);
- Then the probability is averaged across these windows;
- Though pauses in speech are typically 300 ms or longer (pauses shorter than 200 - 300 ms are usually not meaningful), it is hard to confidently classify speech vs noise / music on very short chunks (i.e. 30 - 50 ms);
- We are working on lifting this limitation, so that you can use 100 - 125 ms windows.
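
The steps above can be sketched as a small stateful helper. This is an illustration under assumptions, not the repository's implementation: audio is 16 kHz, chunks are plain lists of floats, consecutive windows start `250 ms / N` apart (so the last window is exactly the new chunk), and `model` is any hypothetical callable mapping a list of 250 ms windows to one speech probability per window. `StreamingVAD` and `toy_model` are hypothetical names.

```python
from typing import Callable, List, Sequence

SAMPLE_RATE = 16_000                # assumption: 16 kHz mono audio
CHUNK = SAMPLE_RATE * 250 // 1000   # 250 ms -> 4000 samples

Model = Callable[[List[List[float]]], List[float]]  # hypothetical: windows -> probabilities


class StreamingVAD:
    def __init__(self, model: Model, num_steps: int = 4):
        if CHUNK % num_steps != 0:
            raise ValueError("num_steps must divide the chunk length evenly")
        self.model = model
        self.num_steps = num_steps
        self.prev = [0.0] * CHUNK   # zeros stand in for the chunk before the stream starts

    def __call__(self, chunk: Sequence[float]) -> float:
        if len(chunk) != CHUNK:
            raise ValueError("expected a 250 ms chunk")
        buffer = self.prev + list(chunk)  # 500 ms = previous chunk + new chunk
        self.prev = list(chunk)
        hop = CHUNK // self.num_steps
        # N overlapping 250 ms windows starting at hop, 2 * hop, ..., CHUNK
        windows = [buffer[s:s + CHUNK] for s in range(hop, CHUNK + 1, hop)]
        probs = self.model(windows)       # one probability per window, run as one batch
        return sum(probs) / len(probs)    # average across the windows


def toy_model(windows: List[List[float]]) -> List[float]:
    """Stand-in model: fraction of non-zero samples in each window."""
    return [sum(1 for x in w if x != 0) / len(w) for w in windows]


vad = StreamingVAD(toy_model, num_steps=4)
print(vad([1.0] * CHUNK))  # 0.625: the early windows still overlap the zero padding
```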


### VAD Quality Metrics Methodology


Please see [VAD Quality Metrics](#vad-quality-metrics).


### How Number Detector Works


TBD, but there is no explicit limitation on the way audio is split into chunks.


### How Language Classifier Works


TBD, but there is no explicit limitation on the way audio is split into chunks.


## Contact


### Get in Touch


Try our models, create an [issue](https://github.com/snakers4/silero-vad/issues/new), start a [discussion](https://github.com/snakers4/silero-vad/discussions/new), join our Telegram [chat](https://t.me/joinchat/Bv9tjhpdXTI22OUgpOIIDg), or [email](mailto:hello@silero.ai) us.


### Commercial Inquiries


Please see our [wiki](https://github.com/snakers4/silero-models/wiki) and [tiers](https://github.com/snakers4/silero-models/wiki/Licensing-and-Tiers) for relevant information and [email](mailto:hello@silero.ai) us directly.
