**Large multi-modal models for strong performance and efficient deployment**

OmniLMM-3B 🤗 🤖 |

OmniLMM-12B 🤗 🤖

**OmniLMM** is a family of open-source large multimodal models (LMMs) adept at vision & language modeling. The model processes images and text inputs and delivers high-quality text outputs. We release two featured versions of OmniLMM that are targeted at **strong performance and efficient deployment**:

- **OmniLMM-12B**: Leading performance among comparable-sized models on multiple benchmarks.

- **OmniLMM-3B**: Frontier end device multi-modal conversation with promising performance.

[中文文档](./README_zh.md)

## Contents

- [Contents](#contents)

- [OmniLMM-12B](#omnilmm-12b)

- [Evaluation](#evaluation)

- [Examples](#examples)

- [OmniLMM-3B](#omnilmm-3b)

- [Evaluation](#evaluation-1)

- [Examples](#examples-1)

- [Demo](#demo)

- [Install](#install)

- [Inference](#inference)

- [Model Zoo](#model-zoo)

- [Multi-turn Conversation](#multi-turn-conversation)

- [✅ TODO](#-todo)

- [Model License](#model-license)

- [Statement](#statement)

- [🏫 Institutions](#-institutions)

## OmniLMM-12B

**OmniLMM-12B** is the most capable version. The model is built based on EVA02-5B and Zephyr-7B-β, connected with a perceiver resampler layer, and trained on multimodal data in a curriculum fashion. The model has three notable features:

- 🔥 **Strong Performance.**

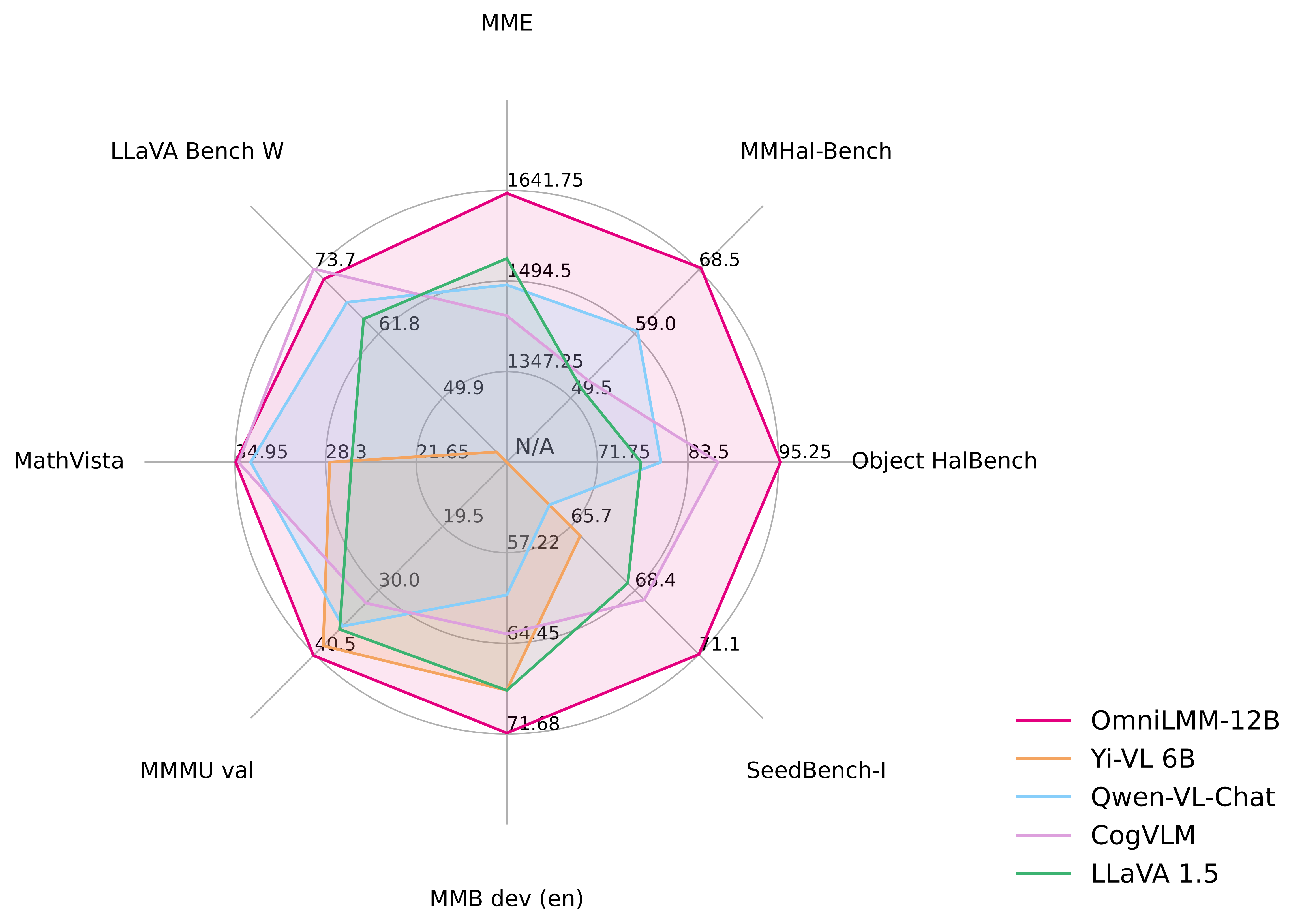

OmniLMM-12B achieves **leading performance** among models with comparable sizes, surpassing established LMMs on multiple benchmarks (including MME, MMBench, SEED-Bench, etc). The model also endows rich multi-modal world knowledge.

- 🏆 **Trustworthy Behavior.**

LMMs are known for suffering from hallucination, often generating text that is not factually grounded in images (e.g., faithfully describing non-existing objects in images). OmniLMM-12B is **the first state-of-the-art open-source LMM aligned via multimodal RLHF for trustworthy behavior** (using our recent [RLHF-V](https://rlhf-v.github.io/) technique). It **ranks #1** among open-source models on [MMHal-Bench](https://huggingface.co/datasets/Shengcao1006/MMHal-Bench), and **outperforms GPT-4V** on [Object HalBench](https://arxiv.org/abs/2312.00849).

- 🕹 **Real-time Multimodal Interaction.**

We combine the OmniLMM-12B and GPT-3.5 (text-only) into a **real-time multimodal interactive assistant**. The assistant accepts video streams from the camera and speech streams from the microphone and emits speech output. While still primary, we find the model can **replicate some of the fun cases shown in the Gemini Demo video, without any video edition**.

### Evaluation

**Large multi-modal models for strong performance and efficient deployment**

**Large multi-modal models for strong performance and efficient deployment**

**Large multi-modal models for strong performance and efficient deployment**

**Large multi-modal models for strong performance and efficient deployment**

](https://modelscope.cn/models/OpenBMB/OmniLMM-12B/files) |

| OmniLMM-3B | The efficient version for end device deployment. | [🤗](https://huggingface.co/openbmb/MiniCPM-V) [

](https://modelscope.cn/models/OpenBMB/OmniLMM-12B/files) |

| OmniLMM-3B | The efficient version for end device deployment. | [🤗](https://huggingface.co/openbmb/MiniCPM-V) [ ](https://modelscope.cn/models/OpenBMB/MiniCPM-V/files) |

### Multi-turn Conversation

Please refer to the following codes to run `OmniLMM`.

](https://modelscope.cn/models/OpenBMB/MiniCPM-V/files) |

### Multi-turn Conversation

Please refer to the following codes to run `OmniLMM`.

[THUNLP](https://nlp.csai.tsinghua.edu.cn/)

-

[THUNLP](https://nlp.csai.tsinghua.edu.cn/)

-  [ModelBest](https://modelbest.cn/)

-

[ModelBest](https://modelbest.cn/)

-  [Zhihu](https://www.zhihu.com/ )

[Zhihu](https://www.zhihu.com/ )